A short history of the National Institute of Standards and Technology (Part Two)

- Harry Law

- Jun 29, 2023

This post is the second in a two-part series looking at the history of NIST. It focuses on the period from 1950 to the present and the incarnation of NIST as we know it.

In the last post, we left the National Bureau of Standards as the 1940s were drawing to a close. The Bureau, which would eventually become NIST, had pushed forward the boundaries of science and driven a programme of standardisation across American industry. It had taken on railways and radios, buildings and beacons for aeroplane landing systems. The agency had weathered recessions and wars, but now faced a different sort of test.

First, in 1950, a new Organic Act (the legislation that determined the Bureau’s role and focus) was passed. While it did not meaningfully change the Bureau’s operations or philosophy, it is worth reflecting on for what it did not include. The new act rejected a 1945 request from Bureau Director Lyman Briggs to widen the mandate of the organisation to include “the prosecution of basic research in physics, chemistry and engineering to promote the development of science, industry and commerce.” While this approach might seem in line with the organisation’s remit at first glance, the core difference is that this ‘basic research’ function would not have been directly tied to any overarching goal of standardisation or testing.

The proposed expansion did not materialise, and the goals of the organisation remained unchanged. That sameness, however, had seen the Bureau drift closer to the U.S. military, with former NIST researcher Elio Passaglia estimating that in 1953 “85 percent of the work was for other agencies, most of it for the military.” In response, a government report published that year by a committee headed by Mervin Kelly of Bell Laboratories argued that the Bureau’s basic standards mission had shrunk and that it was in danger of becoming an arm of the military. Following the publication of the report, more than 2,000 staff (a significant portion of its employees) were transferred from the agency to the U.S. armed forces.

The second challenge was how best to support, and respond to, the rise of computing technologies. Computerisation was the watchword of the 1950s, as machines such as the University of Pennsylvania’s ENIAC, completed in 1945, proved the concept underpinning general-purpose digital computers. In the early years of the decade, the Bureau built the Standards Eastern Automatic Computer (SEAC), the first internally programmed digital computer in the United States. Weighing over 3,000 lb (about 1,360 kg), SEAC was based on the design of EDVAC, a sibling to ENIAC that was also developed at the University of Pennsylvania. With a speed of 1 megahertz and 6,000 bytes of storage, SEAC counted the 1957 production of the world’s first digital image amongst its achievements. Using the computer, the agency partnered with the Census Bureau to develop the Film Optical Sensing Device for Input to Computers (FOSDIC), a machine capable of reading 10 million census answers per hour. Alongside the success of SEAC, the Bureau’s scientists debunked the claim that commercial additives improved battery performance, a controversial finding that led to high-level investigations but ultimately vindicated the Bureau’s testing methods.

In the 1960s, as the organisation moved from Washington D.C. to its new home in Gaithersburg, the Bureau published the Handbook of Mathematical Functions. The book, which was estimated to be cited every 1.5 hours of each working day by the 1990s, contained over 1,000 pages of formulas, graphs, and mathematical tables written partly in response to the mass adoption of computing technologies. Away from computer science, other notable projects in the decade included an effort to improve the reliability of cholesterol tests (understood to be off by as much as 23 per cent in 1967), the construction of the NIST Center for Neutron Research, and efforts to study the elusive particles known as free radicals. It was, by the Bureau’s standards, a quiet decade.

Against the backdrop of the economic turmoil of the 1970s, which once again put pressure on the agency’s composition and budget, the Bureau turned with renewed focus to its core mission: to devise and revise measurement standards at the forefront of science and technology. It began the decade with the construction of the ‘topografiner’, an instrument for measuring surface microtopography. The tool, which could map the microscopic hills, valleys, and flat areas on an object’s surface, enabled researchers to better understand how these characteristics determine properties such as how a material interacts with light, how it feels to the touch, or how well it bonds with other materials. In keeping with a focus on building tools at the cutting edge of measurement science, the agency demonstrated a new thermometer based on the idea that the ‘static noise’ created by a resistor changes in tandem with the temperature of the substance it is in contact with. Completing the trifecta of new measurement processes was a 1972 effort that measured the speed of light at 299,792,456.2 ± 1.1 metres per second, a value one hundred times more accurate than the previous measurement.
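To make the noise thermometer idea concrete, here is a minimal sketch. It assumes the effect at work is the Johnson-Nyquist relation, in which the mean-square noise voltage across a resistor is proportional to its temperature; the post does not name the formula the Bureau used, and the function names and example values below are hypothetical.

```python
# Sketch only: assumes the Johnson-Nyquist relation V_rms^2 = 4 * k_B * T * R * bandwidth.
BOLTZMANN_CONSTANT = 1.380649e-23  # joules per kelvin (exact SI value)


def noise_voltage_rms(temperature_k, resistance_ohm, bandwidth_hz):
    """RMS thermal noise voltage of a resistor at a given temperature."""
    mean_square = 4 * BOLTZMANN_CONSTANT * temperature_k * resistance_ohm * bandwidth_hz
    return mean_square ** 0.5


def temperature_from_noise(voltage_rms, resistance_ohm, bandwidth_hz):
    """Invert the relation: infer temperature from a measured noise voltage."""
    return voltage_rms ** 2 / (4 * BOLTZMANN_CONSTANT * resistance_ohm * bandwidth_hz)


# Illustrative values: a 1 kilohm resistor observed over a 10 kHz bandwidth.
measured = noise_voltage_rms(300.0, 1_000.0, 10_000.0)
print(f"noise voltage: {measured:.3e} V")
print(f"inferred temperature: {temperature_from_noise(measured, 1_000.0, 10_000.0):.1f} K")
```

The signal is well under a microvolt for these values, which hints at why turning the idea into a practical thermometer was a measurement challenge in its own right.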

NIST and NASA collaborated to manufacture standard reference material (SRM) 1960, also known as “space beads.” Source: NIST

This dynamic, of science through measurement and measurement through science, continued as the agency entered the 1980s. Researchers made billions of tiny polystyrene spheres in the low-gravity environment of the Challenger space shuttle during its first flight in 1983. The spheres, known colloquially as ‘space beads’, were made available as a standard reference material for calibrating instruments used by medical, environmental, and electronics researchers. As NIST puts it: “The perfectly spherical, stable beads made for more consistent measurements of small particles like those found in medicines, cosmetics, food products, paints, cements, and pollutants.” A year later, in 1984, the organisation created a new standard for measuring electrical voltage that was more accurate, more stable, and easier to use than those that had previously existed. The new standard was based on the Josephson effect, whereby a voltage is created when microwave radiation is applied to two superconducting materials (materials that can conduct electricity without resistance) separated by a thin insulating layer. Because the voltage depends only on the frequency of the microwave radiation and well-known physical constants, scientists who knew the frequency being applied could reliably calculate the voltage.
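The relationship between microwave frequency and voltage can be sketched in a few lines. This is an illustration under stated assumptions rather than a description of the 1984 apparatus: it uses the standard Josephson relation V = n·f·h/(2e) with today’s exact SI values for the Planck constant and the elementary charge, and the 75 GHz drive frequency and the 10,000-junction array are hypothetical example values.

```python
# Sketch only: Josephson relation V = n * f / K_J, with K_J = 2e / h.
# Constants are the exact values fixed by the 2019 SI redefinition,
# not the values in use when the 1984 standard was built.
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
PLANCK_CONSTANT = 6.62607015e-34     # joule-seconds
JOSEPHSON_CONSTANT = 2 * ELEMENTARY_CHARGE / PLANCK_CONSTANT  # hertz per volt


def josephson_voltage(frequency_hz, step=1, junctions=1):
    """Voltage across `junctions` junctions in series, each on quantised step `step`."""
    return junctions * step * frequency_hz / JOSEPHSON_CONSTANT


single = josephson_voltage(75e9)                    # one junction, hypothetical 75 GHz drive
array = josephson_voltage(75e9, junctions=10_000)   # hypothetical series array

print(f"one junction: {single * 1e6:.2f} microvolts")
print(f"10,000 junctions in series: {array:.3f} volts")
```

A single junction yields only a fraction of a millivolt, so practical voltages require many junctions in series. The only measured input is the frequency, which can be determined very precisely, and that is what makes the effect attractive as the basis for a standard.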

Enter NIST: 1988-present

No longer the National Bureau of Standards, the organisation became the National Institute of Standards and Technology in 1988. The federal government mandated a name change as part of a broader expansion in the organisation's capabilities in response to a need to dramatically enhance the competitiveness of U.S. industry. The move followed a period of offshoring in which, for example, technical capabilities such as those needed to produce computer chips were moved to growing markets such as Japan. As the legislation explains:

It is the purpose of this chapter to rename the National Bureau of Standards as the National Institute of Standards and Technology and to modernize and restructure that agency to augment its unique ability to enhance the competitiveness of American industry while maintaining its traditional function as lead national laboratory for providing the measurements, calibrations, and quality assurance techniques which underpin United States commerce, technological progress, improved product reliability and manufacturing processes, and public safety.

The new focus on industrial competitiveness, however, did not come at the expense of its work with the organs of government. NIST’s forerunner had responded to a call from a government agency to standardise lightbulbs in the early years of the 20th century, but by the 1990s the complexity of requests had naturally grown by orders of magnitude. Following a communication from the National Institute of Justice (the research division of the U.S. Department of Justice), NIST produced the world’s first DNA profiling standard in 1992. The standard was used to ensure the accuracy of restriction fragment length polymorphism analysis, which essentially involves creating a unique ‘barcode’ for each individual by extracting DNA, cutting it into fragments using special proteins, and separating the fragments by placing them in a gel and applying an electric field. The request and response brought into focus how the old organisation and the new resembled each other in process yet diverged in practice. The central difference was that the work of the former was primarily mediated by its role as a federal agency, while that of the latter was shaped by a constellation of social, technical, and material factors that determined what sort of work it could, and should, undertake.

Yet for every new standard like DNA profiling there was a refinement or redevelopment of an existing one. In 1993, NIST researcher Judah Levine developed the NIST Internet Time Service, which allows anyone to set their computer clock to match Coordinated Universal Time. Levine said in 2022 that the service, which in 2016 was estimated to have responded to more than 16 billion requests a day, played an important role in ensuring that computers could agree about when interactions such as message receipts or stock trades took place. A common thread throughout NIST’s story, running from its inception to the present day, is one of reaction and response. NIST was not an island, and its priorities were shaped by the politics of the moment, requests from other federal bodies and respected groups, and by the broader technological, social and economic forces of the day.
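How a computer works out the correction to apply to its clock can be sketched as follows. The post does not describe the protocol, so this assumes an NTP-style exchange in which four timestamps are recorded; the function name and the example numbers are hypothetical, and no network request is made.

```python
# Sketch only: the four-timestamp calculation used in NTP-style time exchanges.
def clock_offset_and_delay(t0, t1, t2, t3):
    """Estimate client clock error and network round-trip delay.

    t0: client clock when the request is sent
    t1: server clock when the request arrives
    t2: server clock when the reply is sent
    t3: client clock when the reply arrives
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2  # amount to add to the client clock
    delay = (t3 - t0) - (t2 - t1)         # time spent on the network, both ways
    return offset, delay


# Hypothetical exchange: the client clock is 5 s slow, each network leg takes
# 0.05 s, and the server spends 0.01 s preparing its reply.
offset, delay = clock_offset_and_delay(t0=100.00, t1=105.05, t2=105.06, t3=100.11)
print(f"estimated offset: {offset:.3f} s, round-trip delay: {delay:.3f} s")
```

The estimate is exact only when the outbound and return legs take the same time; any asymmetry between them shows up as error in the offset, which is one reason agreement between networked clocks is never perfect.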

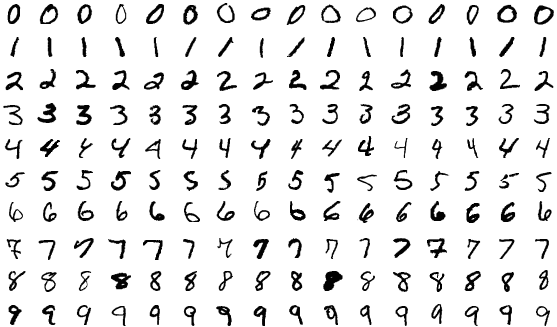

In 1994, all of these factors were in play as the organisation responded to calls for better benchmarking from the machine learning community in the wake of connectionism’s revival. (Connectionism, a form of parallel processing with roots in pattern recognition, would eventually become known as deep learning when the practice of building large, multilayered networks proved to be highly effective.) This was the context in which the organisation created two databases of handwritten digits: NIST Special Database 3 and NIST Test Data 1. The former consisted of characters written by workers at the United States Census Bureau, whereas the latter was made up of characters written by high school students. By hosting a competition in which participants drawn from academia and industry could test their ability to classify the test set, NIST hoped to encourage the development of algorithms capable of generalising to unseen (and sufficiently different) data. In practice, though, because the two sets were drawn from dissimilar distributions, many of the algorithms performed poorly on the test set despite achieving error rates of less than 1 per cent on validation sets drawn from the training data. In response, at the conclusion of the competition, researchers from Bell Labs mixed the training and test datasets to produce two new sets for training and testing. The result was the MNIST database (where the ‘M’ stands for ‘modified’), one of the most recognisable and well-known machine learning benchmarks.
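The remixing idea can be illustrated with a toy sketch. This is not the procedure the Bell Labs researchers followed, whose details the post does not give; it simply shows how pooling two sources and splitting each one across both sets ensures that training and test data come from the same mixture. The function name, the 50/50 split, and the placeholder records are all hypothetical.

```python
import random


def remix(source_a, source_b, test_fraction=0.5, seed=0):
    """Split each source separately so both new sets contain samples from both."""
    rng = random.Random(seed)
    train, test = [], []
    for source in (source_a, source_b):
        shuffled = list(source)
        rng.shuffle(shuffled)
        cut = int(len(shuffled) * (1 - test_fraction))
        train.extend(shuffled[:cut])
        test.extend(shuffled[cut:])
    rng.shuffle(train)
    rng.shuffle(test)
    return train, test


# Placeholder records standing in for digit images from the two groups of writers.
census_workers = [("census", i) for i in range(10)]
students = [("student", i) for i in range(10)]

train_set, test_set = remix(census_workers, students)
print("origins in training set:", sorted({origin for origin, _ in train_set}))
print("origins in test set:", sorted({origin for origin, _ in test_set}))
```

With the original split, a model trained only on one group of writers was evaluated only on the other; after remixing, both groups appear on each side, so test performance reflects the same distribution the model was trained on.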